On Azure

Azure Kubernetes Service (AKS) is an Azure service for deploying, managing, and scaling distributed, containerized workloads. In this guide, we provision an AKS cluster on Azure from the ground up in an automated way (infrastructure as code) using Terraform, and then deploy the DIGIT-iFIX services as config-as-code using Helm.

This quickstart assumes a basic understanding of Kubernetes concepts. For more information, see Kubernetes core concepts for Azure Kubernetes Service (AKS).

If you don't have an Azure subscription, create a free account before you begin.

Prerequisites

Use the Bash environment in Azure Cloud Shell.

If you prefer, install the Azure CLI to run CLI reference commands.

If you're using a local installation, sign in to the Azure CLI by using the az login command. To finish the authentication process, follow the steps displayed in your terminal. For additional sign-in options, see Sign in with the Azure CLI.

When you're prompted, install Azure CLI extensions on first use. For more information about extensions, see Use extensions with the Azure CLI.

Run az version to find the version and dependent libraries that are installed. To upgrade to the latest version, run az upgrade.

This article requires version 2.0.64 or greater of the Azure CLI. If using Azure Cloud Shell, the latest version is already installed.
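A minimal Python sketch of that version check is shown below. It assumes the Azure CLI is on PATH and that `az version -o json` prints an object whose `azure-cli` key holds a plain dotted version string. The helper names are illustrative and are not part of any DIGIT tooling.

```python
import json
import shutil
import subprocess

MIN_AZ_CLI = (2, 0, 64)  # minimum Azure CLI version this guide requires


def parse_version(text: str) -> tuple:
    """Turn '2.0.64' into (2, 0, 64) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in text.strip().split("."))


def az_cli_version(az_version_json: str) -> tuple:
    """Read the 'azure-cli' entry from the JSON printed by `az version -o json`."""
    return parse_version(json.loads(az_version_json)["azure-cli"])


def meets_minimum(version: tuple, minimum: tuple = MIN_AZ_CLI) -> bool:
    """True when the installed version is at least the required minimum."""
    return version >= minimum


if __name__ == "__main__":
    if shutil.which("az") is None:
        print("Azure CLI (az) not found on PATH")
    else:
        raw = subprocess.run(["az", "version", "-o", "json"],
                             capture_output=True, text=True, check=True).stdout
        current = az_cli_version(raw)
        verdict = "OK" if meets_minimum(current) else "too old, run `az upgrade`"
        print(".".join(map(str, current)), verdict)
```

Comparing tuples avoids the string-comparison trap where "2.0.64" would sort after "2.40.0".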

Make sure the identity you use to create your cluster has the appropriate minimum permissions. For more details on access and identity for AKS, see Access and identity options for Azure Kubernetes Service (AKS).

Install kubectl on your local machine to interact with the Kubernetes cluster.

Install Helm to package the services, along with their configurations, environment variables, secrets, etc., into Kubernetes manifests.

Install Terraform version 0.14.10 for infrastructure as code (IaC). Terraform provisions the cloud resources as code with the desired resource graph, and it can also destroy the whole cluster in one go.
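Before moving on, a small preflight sketch can confirm that the tools listed above are installed. It assumes the executables are named `az`, `kubectl`, `helm`, and `terraform`. It also assumes Terraform reports its version through `terraform version -json` (available from Terraform 0.13 onward) in a `terraform_version` field.

```python
import json
import shutil
import subprocess

REQUIRED_TOOLS = ["az", "kubectl", "helm", "terraform"]
PINNED_TERRAFORM = "0.14.10"  # Terraform version this guide targets


def missing_tools(tools=REQUIRED_TOOLS, which=shutil.which) -> list:
    """Return the tools that cannot be found on PATH."""
    return [tool for tool in tools if which(tool) is None]


def terraform_version_matches(version_json: str, expected: str = PINNED_TERRAFORM) -> bool:
    """Compare the 'terraform_version' field of `terraform version -json` with the pin."""
    return json.loads(version_json).get("terraform_version") == expected


if __name__ == "__main__":
    missing = missing_tools()
    print("missing tools:", ", ".join(missing) if missing else "none")
    if "terraform" not in missing:
        raw = subprocess.run(["terraform", "version", "-json"],
                             capture_output=True, text=True, check=True).stdout
        print("terraform pinned version:",
              "OK" if terraform_version_matches(raw) else f"expected {PINNED_TERRAFORM}")
```

The `which` parameter is injectable, so the lookup can be swapped out when testing on a machine without the tools.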

Note: Run the commands as administrator if you plan to run the commands in this quickstart locally instead of in Azure Cloud Shell.

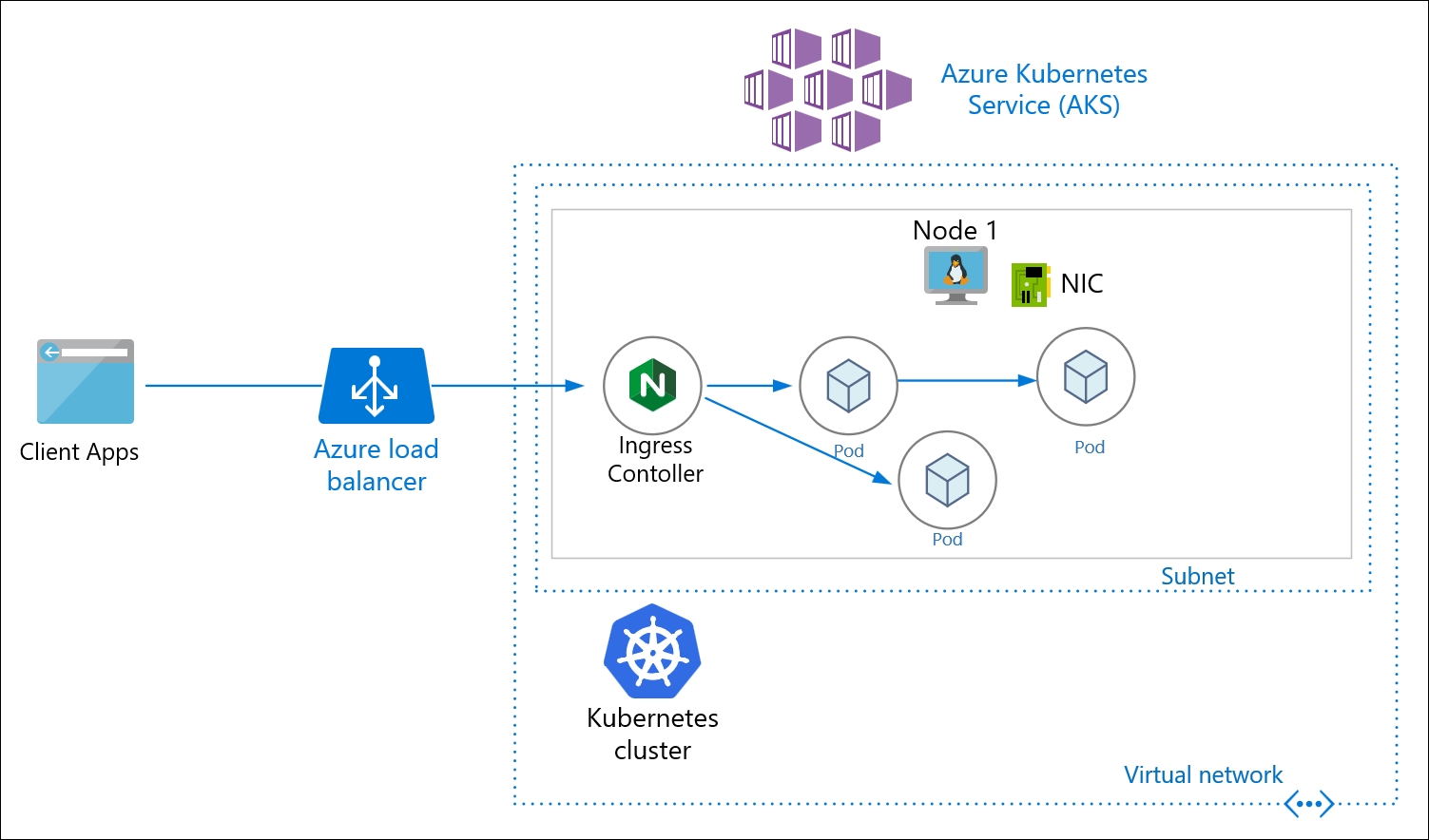

AKS Architecture

Before provisioning the cloud resources, we need to understand which resources Terraform must provision to deploy DIGIT. The following picture shows the key components: AKS, worker nodes, Postgres DB, volumes, and the load balancer.

AKS Architecture For iFIX Setup

Based on the deployment architecture above, the following is the resource graph we provision with Terraform in a standard way, so that every run and every environment gets the same infrastructure.

Azure AKS (Kubernetes Service master)

Worker node group (VMs with the estimated number of vCPUs and memory)

Volumes (persistent volumes)

PostgreSQL database

Virtual network

Users with access, deploy, and read-only permissions
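Terraform derives a dependency graph from the references between resources. It creates independent resources in parallel and destroys them in reverse dependency order. The sketch below illustrates that idea with Python's `graphlib`. The edges are hypothetical and only approximate the components listed above, so treat the repo's main.tf as the source of truth.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical dependency map: resource -> resources that must exist first.
# Illustrative only; the real edges come from the references in main.tf.
RESOURCE_GRAPH = {
    "virtual_network": set(),
    "aks_cluster": {"virtual_network"},
    "worker_node_group": {"aks_cluster"},
    "persistent_volumes": {"aks_cluster"},
    "postgresql_database": {"virtual_network"},
    "access_users": {"aks_cluster"},
}


def creation_order(graph: dict) -> list:
    """One valid creation order: every dependency comes before its dependents."""
    return list(TopologicalSorter(graph).static_order())


def destruction_order(graph: dict) -> list:
    """Reverse of creation, so nothing is removed while another resource still needs it."""
    return list(reversed(creation_order(graph)))


if __name__ == "__main__":
    print("create :", " -> ".join(creation_order(RESOURCE_GRAPH)))
    print("destroy:", " -> ".join(destruction_order(RESOURCE_GRAPH)))
```

Terraform itself does not run resources strictly one at a time. A linear order is simply the easiest way to see which resources must wait for which.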

Understand the Resource Graph in the Terraform Script

Ideally, one would write the Terraform script from scratch using this doc.

Here, we have already written a Terraform script that provisions production-grade DIGIT infrastructure and can be customized with your configuration.

Clone the iFix-DevOps GitHub repo, which contains the Terraform script that provisions the AKS cluster.

The main.tf file in the following directory contains the detailed definitions of the resources to be provisioned; please review it.

Dir: iFix-DevOps/Infra-as-code/terraform/aks-ifix-dev

Custom Variables/Configurations

Define your configurations in variables.tf and provide the environment-specific cloud requirements, so that you can customize the same Terraform template for each environment.

Specify the following values in the file below. Terraform prompts for any that are left blank during execution.

variables.tf
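Besides editing defaults in variables.tf, you can supply values in other ways. Terraform automatically loads a `terraform.tfvars` file from the working directory, and it also reads environment variables named `TF_VAR_<name>`. The sketch below renders a Python dict into tfvars syntax. The variable names and values are hypothetical placeholders; substitute the ones actually declared in variables.tf.

```python
import json

# Hypothetical variable names and values; use the ones declared in variables.tf.
EXAMPLE_VALUES = {
    "environment": "ifix-dev",
    "location": "southindia",
    "node_count": 3,
    "enable_monitoring": False,
}


def to_hcl(value) -> str:
    """Render a scalar as an HCL literal (strings, numbers, and booleans only)."""
    if isinstance(value, bool):  # test bool first: bool is a subclass of int
        return "true" if value else "false"
    if isinstance(value, (int, float)):
        return str(value)
    if isinstance(value, str):
        return json.dumps(value)  # double-quoted, with backslash escapes
    raise TypeError(f"unsupported value type: {type(value).__name__}")


def render_tfvars(values: dict) -> str:
    """One `name = value` line per variable, as in a terraform.tfvars file."""
    return "".join(f"{name} = {to_hcl(value)}\n" for name, value in values.items())


if __name__ == "__main__":
    # Print rather than overwrite; redirect into terraform.tfvars once it looks right.
    print(render_tfvars(EXAMPLE_VALUES), end="")
```

This covers only plain scalars. Lists, maps, and strings containing `${` (which HCL treats as interpolation) would need extra handling.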

Run Terraform

We now know what the Terraform script does, the resource graph it provisions, and which custom values to provide for your environment.

Let's run the Terraform scripts to provision the infrastructure required to deploy DIGIT on Azure.

First, cd into the Terraform directory shown above. Then run the Terraform commands one by one (typically terraform init, terraform plan, and terraform apply) and watch the output closely.
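The exact command list is not reproduced here. Assuming the standard Terraform workflow (`terraform init`, `terraform plan`, `terraform apply`), a Python sketch that runs the steps in order and stops at the first failure could look like the one below. By default it does a dry run that only prints the commands. The `--execute` flag and the saved-plan file name `tfplan` are illustrative choices, not part of the repo.

```python
import subprocess
import sys

# Relative to wherever the iFix-DevOps repo was cloned.
TF_DIR = "iFix-DevOps/Infra-as-code/terraform/aks-ifix-dev"

# Assumed standard workflow; check the repo for the exact commands it expects.
STEPS = [
    ["terraform", "init"],
    ["terraform", "plan", "-out=tfplan"],
    ["terraform", "apply", "tfplan"],
]


def run_steps(steps, cwd, dry_run=True, runner=subprocess.run):
    """Run each command in order; check=True raises on the first non-zero exit."""
    executed = []
    for cmd in steps:
        print("$", " ".join(cmd))
        if not dry_run:
            runner(cmd, cwd=cwd, check=True)
        executed.append(cmd)
    return executed


if __name__ == "__main__":
    # Dry run unless --execute is passed explicitly.
    run_steps(STEPS, TF_DIR, dry_run="--execute" not in sys.argv)
```

Applying a saved plan does not ask for confirmation again, so review the `plan` output before running with `--execute`. The same helper can run `["terraform", "destroy"]` to tear the cluster down in one go.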

Upon successful execution, the following resources are created. You can verify them with the terraform output command.

Network: Virtual Network.

AKS cluster: with nodepool(s), master(s) & worker node(s).

Storage(s): for es-master, es-data-v1, es-master-infra, es-data-infra-v1, zookeeper, kafka, kafka-infra.
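`terraform output -json` prints each output as an object with `sensitive`, `type`, and `value` fields, and it includes sensitive values in plain text. The short Python sketch below flattens that JSON and masks sensitive entries. It assumes you first saved the output with `terraform output -json > outputs.json` (the file name is arbitrary). The actual output names depend on how the repo defines its outputs.

```python
import json
import sys


def summarize_outputs(output_json: str) -> dict:
    """Flatten `terraform output -json` into {name: value}, masking sensitive values."""
    outputs = json.loads(output_json)
    return {
        name: "<sensitive>" if meta.get("sensitive") else meta.get("value")
        for name, meta in outputs.items()
    }


if __name__ == "__main__" and len(sys.argv) == 2:
    # Usage: terraform output -json > outputs.json && python summarize_outputs.py outputs.json
    with open(sys.argv[1], encoding="utf-8") as fh:
        for name, value in summarize_outputs(fh.read()).items():
            print(f"{name}: {value}")
```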

Connect To The Cluster

To manage a Kubernetes cluster, use the Kubernetes command-line client, kubectl. kubectl is already installed if you use Azure Cloud Shell.

1. If you are working locally, install kubectl using the az aks install-cli command.

2. Configure kubectl to connect to your Kubernetes cluster using the az aks get-credentials command. This command downloads credentials and configures the Kubernetes CLI to use them. By default, it writes to ~/.kube/config, the standard location for the Kubernetes configuration file; use --file to specify a different location.

3. Finally, verify that you can connect to the cluster, for example by listing its nodes with kubectl get nodes.
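Configuring kubectl and verifying the connection can also be scripted. The Python sketch below builds the `az aks get-credentials` call, adding `--file` only when a custom kubeconfig path is given. It then reads `kubectl get nodes -o json` and reports any node whose `Ready` condition is not `True`. Pass the resource group and cluster name that your Terraform run created. The script name in the usage comment is hypothetical.

```python
import json
import subprocess
import sys


def credentials_command(resource_group, cluster_name, kubeconfig=None):
    """Build the `az aks get-credentials` call; without --file it uses ~/.kube/config."""
    cmd = ["az", "aks", "get-credentials",
           "--resource-group", resource_group, "--name", cluster_name]
    if kubeconfig:
        cmd += ["--file", kubeconfig]
    return cmd


def not_ready_nodes(nodes_json):
    """Names of nodes whose Ready condition is not 'True' in `kubectl get nodes -o json`."""
    pending = []
    for node in json.loads(nodes_json)["items"]:
        conditions = node.get("status", {}).get("conditions", [])
        ready = any(c.get("type") == "Ready" and c.get("status") == "True"
                    for c in conditions)
        if not ready:
            pending.append(node["metadata"]["name"])
    return pending


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage (hypothetical script name): python connect_aks.py <resource-group> <cluster-name>
    subprocess.run(credentials_command(sys.argv[1], sys.argv[2]), check=True)
    raw = subprocess.run(["kubectl", "get", "nodes", "-o", "json"],
                         capture_output=True, text=True, check=True).stdout
    pending = not_ready_nodes(raw)
    print("all nodes Ready" if not pending else f"not ready: {', '.join(pending)}")
```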

All set! You can now proceed to Deploy Product.
